Portal26’s State of Enterprise Tokenization Report

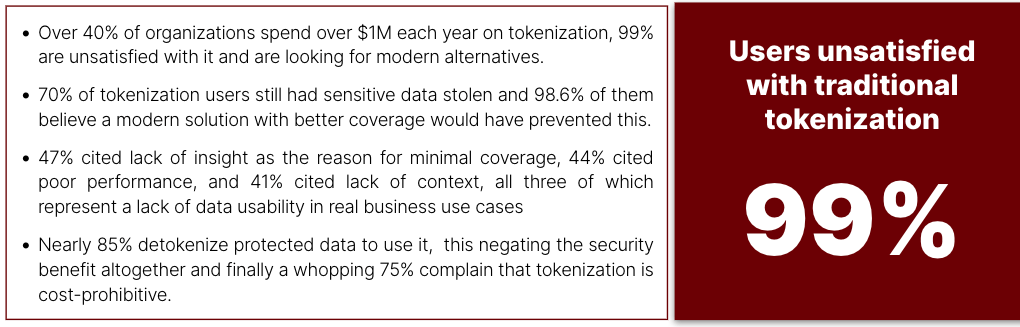

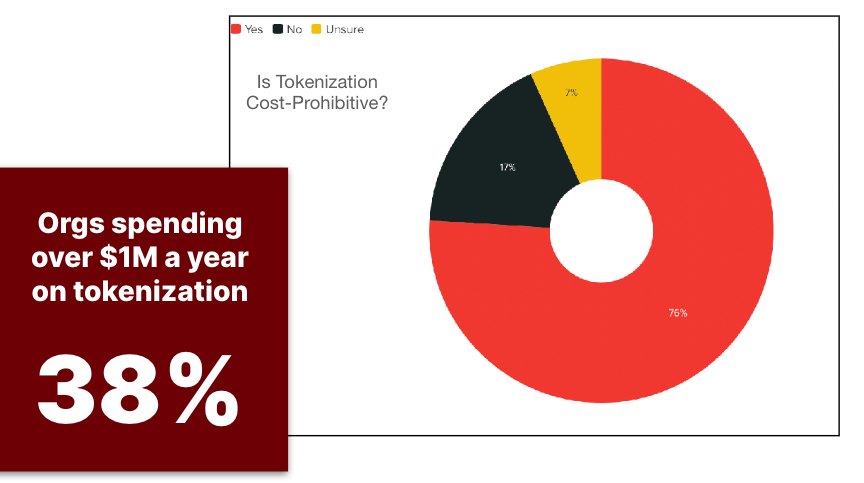

Portal26’s State of Enterprise Tokenization Report for 2022 finds that 40% of enterprises spend jaw-dropping sums of over $1M per year on tokenization, yet 70% of them complain of inadequate coverage and compromised data. As a result, a whopping 98% of enterprises are ready to embrace a more modern solution that provides strong security with better coverage and without usability tradeoffs.

Executive Summary

Ever since its introduction in 2001, tokenization has remained an indispensable tool in the enterprise security toolbox. When sensitive data is tokenized, the original data is replaced with an unrelated and randomly generated token (in the case of vaulted tokenization), or a cryptographically generated one (in the case of vaultless tokenization). With the original data not being present in applications and databases, attackers breaking into these systems are unable to access anything of value. Tokenization serves not just a security purpose, but also speaks to privacy and compliance use cases.
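To make the difference between the two approaches concrete, here is a minimal Python sketch. It is an illustration only and does not describe any vendor's product: the class names `VaultedTokenizer` and `VaultlessTokenizer` are hypothetical, the in-memory dictionaries stand in for a secured vault database, and the vaultless variant uses a simple HMAC-based Feistel construction as a stand-in for a standardized format-preserving encryption mode. It should not be used to protect real data.

```python
import hashlib
import hmac
import secrets


class VaultedTokenizer:
    """Vaulted: tokens are random, and the token-to-value mapping lives in a vault."""

    def __init__(self):
        # Hypothetical stand-ins for a secured vault database.
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = secrets.token_hex(8)  # random, unrelated to the value
        while token in self._token_to_value:  # retry on the rare collision
            token = secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


class VaultlessTokenizer:
    """Vaultless: the token is derived from the value with a secret key.

    No lookup table is stored; whoever holds the key can reverse the token.
    Illustrative Feistel network only, not a vetted cipher.
    """

    ROUNDS = 8  # must be even so the two halves return to their original sizes

    def __init__(self, key: bytes):
        self._key = key

    def _round(self, round_no: int, half: bytes, length: int) -> bytes:
        digest = hmac.new(self._key, bytes([round_no]) + half, hashlib.sha256).digest()
        return digest[:length]

    def tokenize(self, value: str) -> str:
        data = value.encode("utf-8")
        if not 2 <= len(data) <= 64:
            raise ValueError("this sketch handles values of 2 to 64 bytes")
        mid = len(data) // 2
        left, right = data[:mid], data[mid:]
        for i in range(self.ROUNDS):
            left, right = right, _xor(left, self._round(i, right, len(left)))
        return (left + right).hex()

    def detokenize(self, token: str) -> str:
        data = bytes.fromhex(token)
        mid = len(data) // 2
        left, right = data[:mid], data[mid:]
        for i in reversed(range(self.ROUNDS)):
            left, right = _xor(right, self._round(i, left, len(right))), left
        return (left + right).decode("utf-8")


card = "4111111111111111"  # well-known test card number, not real data

vaulted = VaultedTokenizer()
vaulted_token = vaulted.tokenize(card)

vaultless = VaultlessTokenizer(key=secrets.token_bytes(32))
vaultless_token = vaultless.tokenize(card)
```

In both cases an application stores only the token. The vaulted tokenizer can recover the original only through its vault lookup, while the vaultless tokenizer recomputes it from the key, which is why it needs no mapping store but depends entirely on key protection.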

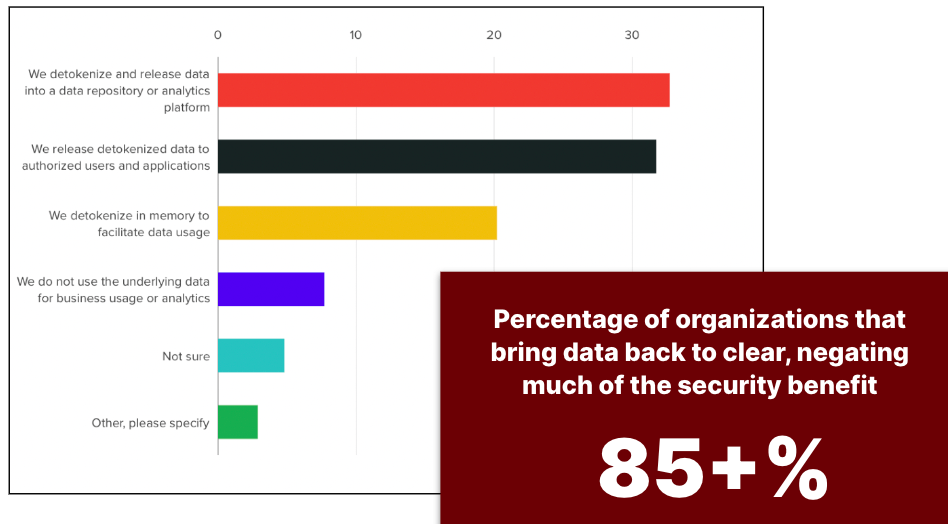

It is no surprise then that enterprises are willing to go to great lengths to implement tokenization solutions, despite the extremely disruptive and resource-intensive nature of traditional tokenization solutions. Beyond the time and effort to deploy, tokenization also carries an extremely high cost in terms of business data use, essentially eliminating all rich data usage options. In its tokenized form, data cannot be searched or analyzed for insight. For it to be used in an insightful way, the data must be detokenized and released right back into its original, vulnerable clear text state. Some traditional tokenization solutions detokenize in memory while others release clear text into downstream analytical processes, thus limiting the overall security benefit.

However, despite having been around for over 20 years, the real impact of tokenization has been limited to payment card data and a select few fields beyond that. Anytime enterprises deal in data that is truly required for insight, analytics, or rich search, tokenization fails to provide coverage. As a result, we continue to see large-scale breaches and sensitive data compromises in enterprises that invest millions annually in traditional tokenization solutions.

At Portal26, we understand that what enterprises really need is the strength of tokenization without the tough tradeoffs of the past. Our product suite delivers this, and we invite you to discover the power of a modern data security platform that speaks to both strong security and data-centric decision making at near real-time speeds.

To get first-hand data on enterprise sentiment regarding current tokenization solutions, as well as what enterprises ask of modern solutions, Portal26 commissioned an independent third party to conduct a study on this topic. In this original research study covering 104 enterprises, we uncovered the true state of tokenization in enterprises. We present our findings in this report.

Ultimately, the data showed that enterprises are tired of spending enormous amounts to protect a small portion of sensitive data and are ready for a better answer! We offer this data to you, our readers, so that you have the information you need to make a strong case for improving data security in your organization. As always, please feel free to write with questions or comments.

Summary of Study Participants

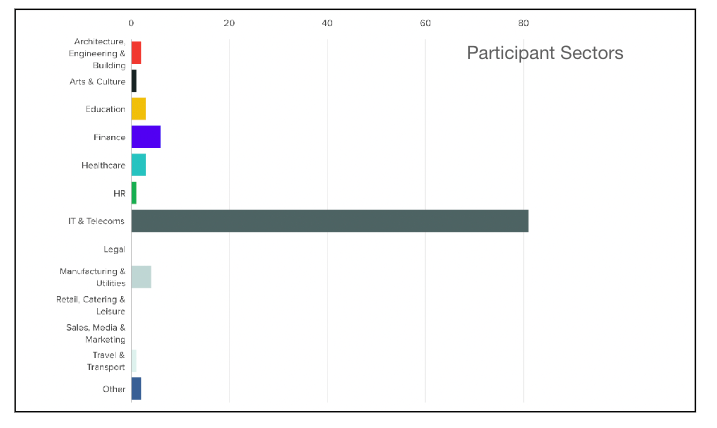

Portal26’s State of Tokenization Study included 104 participants across the United States from a variety of industries. Participants were all security professionals. We requested a wide distribution across regions and cities, and participation reflected this. See the chart below for a breakdown of industries covered.

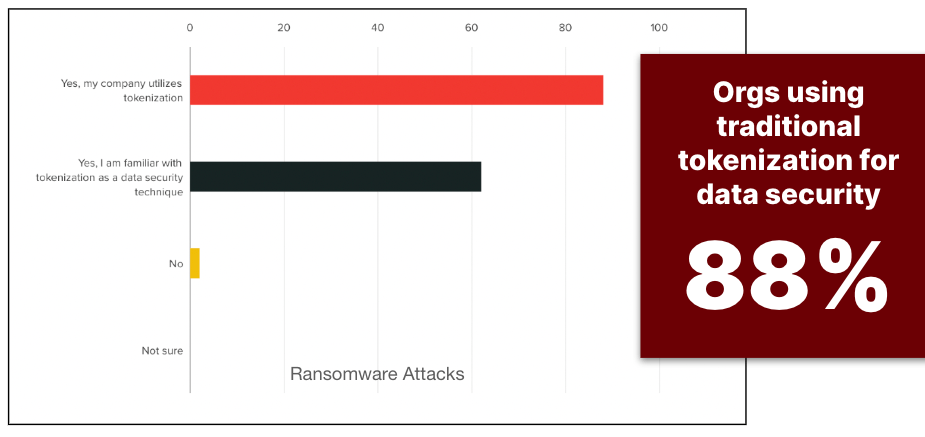

Tokenization is Widely Used and Well Understood

The study found that over 80% of surveyed companies utilize tokenization, confirming our understanding that tokenization is prevalent in a large majority of security-conscious enterprises. The study also confirmed a widespread understanding of how tokenization works, with over 60% of participants being familiar with it.

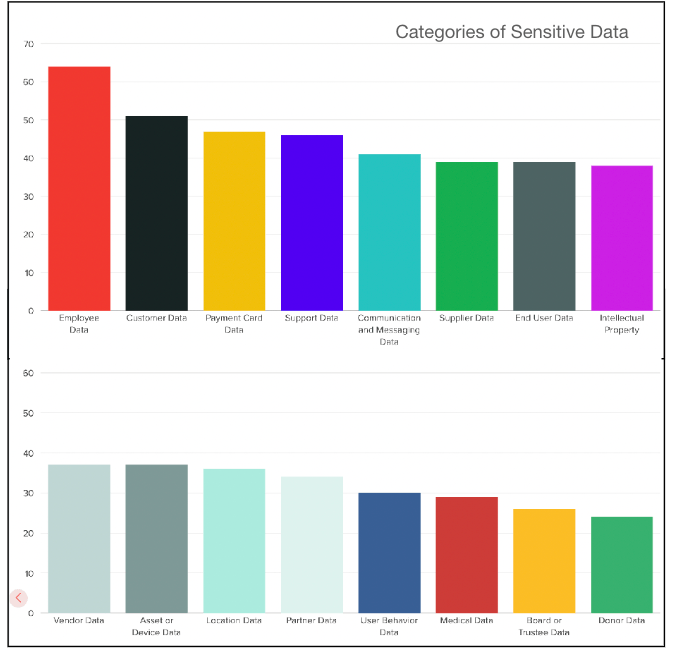

Enterprises House a Wide Spectrum of Sensitive Data

With the chart below depicting all the categories of sensitive data that participating enterprises would like to protect, it is easy to see why tokenization might fall short in its ability to provide adequate coverage. For a modern data security strategy to be effective, it would need to account for the variety of data and all the business processes around this sensitive data. The Y-axis represents the number of companies out of a total of 104 participants.

Seeing the large variety of data that needs to be protected, we were curious about how companies went about protecting this data. In particular, as it relates to tokenization, we were interested in whether it was a helpful technique to protect these categories and if not, what prevented enterprises from utilizing tokenization as a data security tool in this regard.

Separately, we were also interested in learning what happens to data that is tokenized but eventually required for analytics or other types of in-depth business usage.

For these reasons, we set up the survey to ask a series of questions about this data that would shed light on both actual coverage as well as effectiveness of tokenization as a data security control in the enterprise. The next few questions address this area.

Even Though it is Widely Used, Tokenization Fails to Provide Coverage

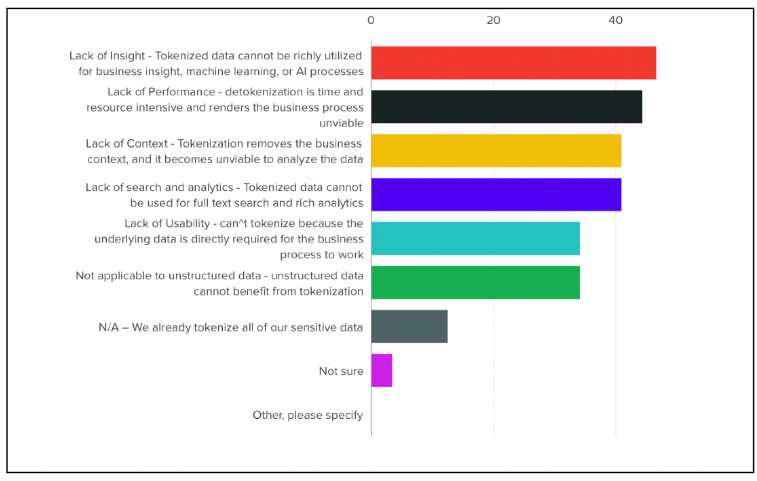

The study found that even though over 80% of participants use tokenization, they are unable to use it to protect all the data that truly needs protection. When asked what specific limitations they face, 47% cited lack of insight as the reason for minimal coverage, 44% cited poor performance, and 41% cited lack of context, all three of which represent a lack of data usability in real business use cases. The chart shows responses as percentages.

Data that is Tokenized Still Ends up in the Clear

Enterprises Spend Millions Despite Dismal Data Coverage

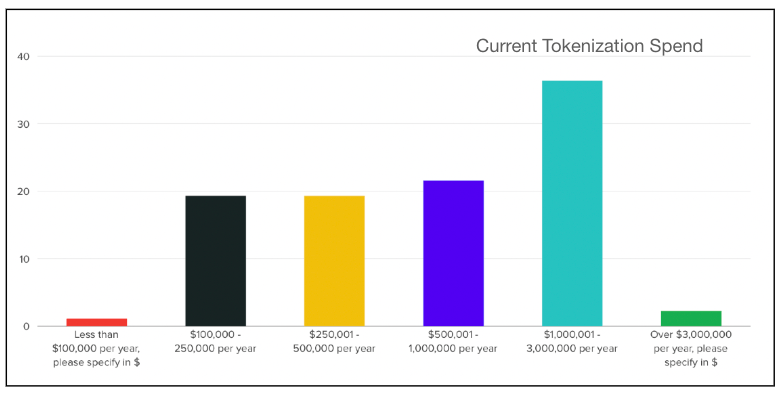

Study participants revealed that many pay millions for traditional tokenization while still not gaining meaningful data protection coverage. With 36% spending between $1M and $3M and an additional 2% spending over $3M each year, it is no surprise that enterprises are ready for better ROI. The chart below shows responses as percentages.

When asked the same question another way, enterprises responded by saying tokenization is cost-prohibitive. This confirmed our anecdotal understanding: tokenization works well when it simply removes data, but it fails when the underlying data is truly required and becomes less and less useful as sensitive data categories increase. In light of all that, enterprises find the cost of tokenization to be a heavy burden.

Enterprises Want Better Features and Fewer Tradeoffs

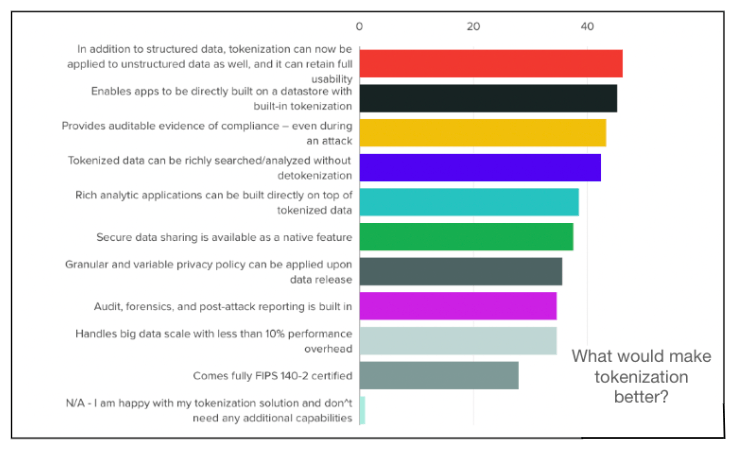

The study polled participants on what features would improve their tokenization experience. The survey presented modern data protection capabilities that make “Next Gen Tokenization” dramatically different in its coverage, usability, and performance. Examine the chart below for the features that received the most votes. Notice that only 1% of participants are happy with their current tokenization solution.

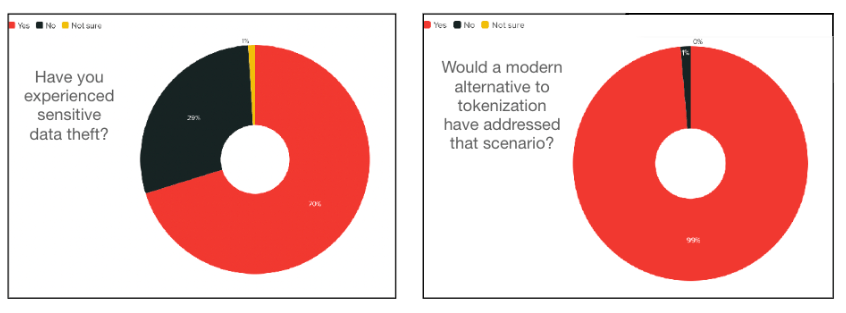

Our final question asked users if they experienced loss of sensitive data despite their investment in tokenization. We also asked whether they believe a more modern solution such as one with features described above would, in their opinion, prevent such compromises in the future.

Answers confirmed our suspicion that as data usage explodes and enterprises move into the era of data-driven decision making, we need data protection solutions that deliver strong security without getting in the way of business. Traditional tokenization is not the right answer. Enterprises need to look to “Next Gen” solutions that address traditional limitations and provide superior alternatives. Portal26 offers an answer, and we invite you to explore our solution.

Learn More About Next Gen Tokenization for Data Intensive Organizations

Portal26 Vault is the industry’s most advanced vaulted and vaultless tokenization solution, delivering all the benefits of tokenization without the severe data usability and performance restrictions that organizations have had to live with in the past.